HASTAC 2011 has posted videos of some of their panels, and I was taken with two points, brought up by Dan Cohen and Tara McPherson as part of the panel “The Future of Digital Publishing,” which can be viewed here.

First, I was taken with Cohen’s final suggestion, that humanities scholars are “terrible economists” because our pursuit of print perfection causes an inordinate investment in the final stages of publication (proof-reading, reformatting notes into periodical-specific styles). As he notes, we have learned to look past such fastidiousness in some web formats, and this indicates that, in a “webby” mode, we are able to relax those standards and still take work seriously.

McPherson’s wide-ranging discussion canvased the new formats and possibilities which digital archives are opening up to us, and she asked Humanities scholars not to cede the task of figuring out how to manage massive data sets to scientific and computer scientific communities. I was particularly caught by the description she gave of a question that keeps her up at night: ten or fifteen years for now, how will young scholars make sense of the wild explosion of publication formats and approaches which archives and DH work have opened up?

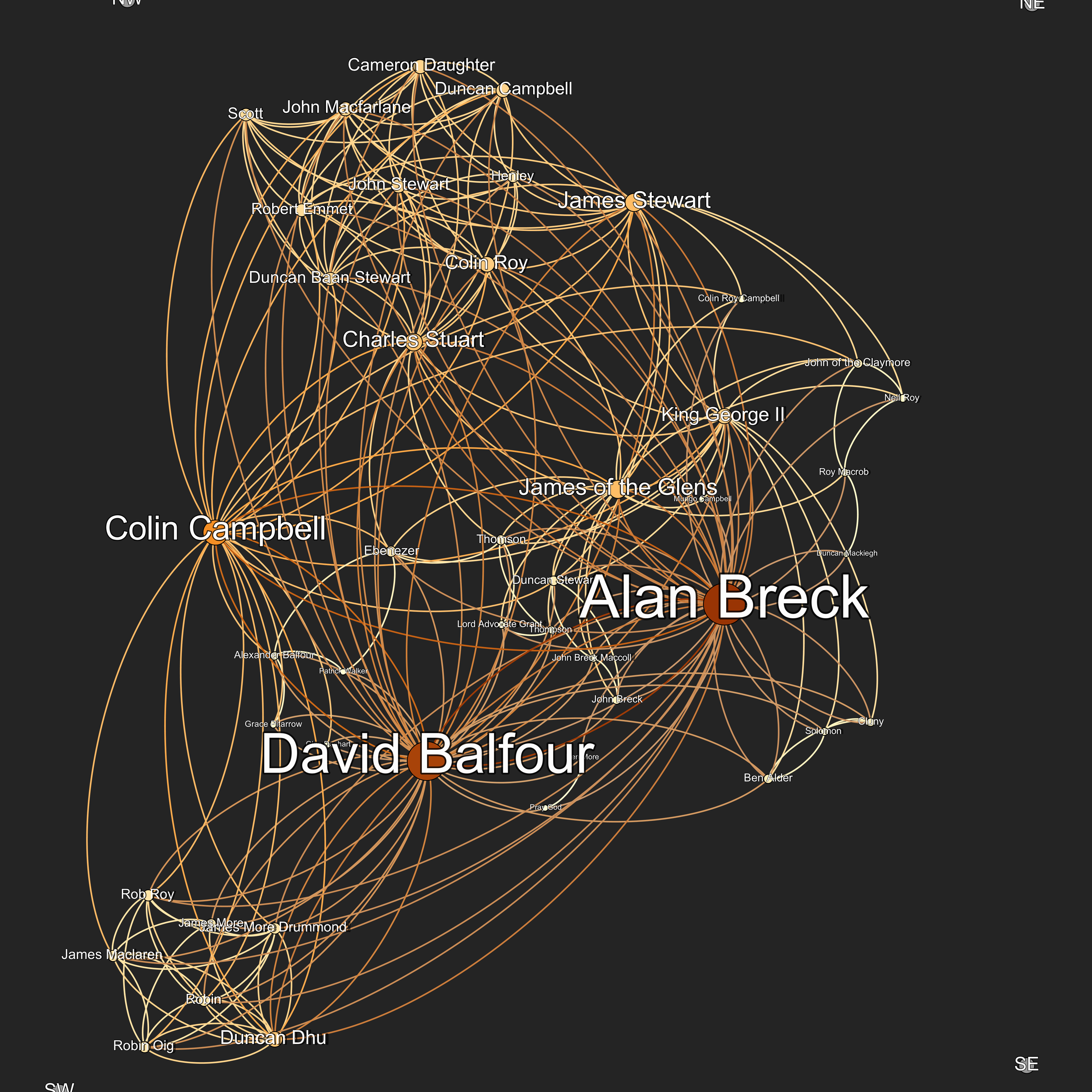

This, for me, raises a third question and perhaps more challenging problem: how will we cultivate scholarly digital literacy? Part of what reinforces the power and importance of print and text-based publication is the high-level textual literacy that humanists develop. I think about how hard it was to develop the specialized literacy it took for me to understand scholarly publishing formats — this demanded a huge evolution in my reading practices, above and beyond what I would describe as my already high-level textual literacy as an undergraduate. When we present something like a simple chart, or even an object as complex as an active network visualization, much less expose users to archives of new material, we tacitly demand some literacy in those formats. Brief textual descriptions and introductions don’t suffice here. Cohen’s observation regarding the relaxed constraints offered by “webby” publishing standards emphasizes the point: we tolerate spelling errors because the effort otherwise put into exhaustive spell-checking is being invested elsewhere, in the aspects of digital scholarship that entail considerable investments in both acculturation and ongoing labor.

I think there is an expectation that great content and great scholarship will cultivate literacy. The iPad certainly shows (as McPherson notes), that a transformative product can drive technical literacy in a way that seems immediate and unreflective — a transformation so profound that it produces what Thomas Kuhn describes as the “gestalt” experience of a new episteme — and the duck becomes a rabbit. And yet, I worry that new technologies and techniques, especially as they are initially developed, pull in precisely the opposite direction. Certainly, this concern weighed on me as I decided which path to pursue in my own work, and I’ve opted primarily for publication in traditional print formats, and the forms of scholarship that would help me achieve that aim. If you watch to the close of the talk, the audience questions are dominated by the problem of tenure and scholarship-evaluation standards. But this is generally cast in terms of accommodation or, alternatively, forcing traditional scholars to change their practices, rather than acknowledging that digital scholarship demands, in effect, a new, and truly complex, set of literacies.